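A while ago, while scrolling through Douyin, I came across a video where you pick one of two images, one on the left and one on the right, by tilting your head toward it. It looked like fun, and it immediately reminded me of face-api.js, which I had come across years ago, so I decided to build it on top of that. It basically works now, but plenty of details still need improvement; it isn't as polished or smooth as it could be.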

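1. Requirements analysis

Let's get straight to it!

- The basic page layout: one image on each side, with scaling and movement animations

- Open the camera, get the video stream, and display it in a `<video>` element

- Detect which side the user is tilting their head toward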

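2. Implementation

2.1 Page layout and CSS animation

This part isn't hard: once the layout is in place, it's just a matter of adding CSS animations. Mine are rough and not very polished, but they work well enough. For example, `leftHeartMove` below animates the small heart in the middle moving toward the left side: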

```css
.heart {
  width: 30px;
  height: 30px;
  padding: 4px;
  box-sizing: border-box;
  border-radius: 50%;
  background-color: #fff;
  position: absolute;
  top: -15px;
  left: 50%;
  transform: translateX(-50%) rotateZ(0deg) scale(1);
  animation: leftHeartMove 0.5s linear;
  animation-fill-mode: forwards;
  z-index: 2;
}

@keyframes leftHeartMove {
  from {
    top: -15px;
    left: 50%;
    transform: translateX(-50%) rotateZ(0deg) scale(1);
  }
  to {
    top: 65px;
    left: -13%;
    transform: translateX(-50%) rotateZ(-15deg) scale(1.2);
  }
}
```

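2.2 Opening the camera and displaying the video

Notes

- For H5 (mobile web) pages:

  - `navigator.mediaDevices.getUserMedia` can bring up the camera permission prompt on `localhost` during local development, but a deployed page must be served over HTTPS; over plain HTTP the camera cannot be opened at all.

  - Once the video stream is playing in the `<video>` element, the picture needs to be mirrored, which CSS can do with `transform: rotateY(180deg)`.

  - On a phone in portrait mode the video won't fill the screen by default; setting `object-fit: cover` on the `<video>` makes it fill the screen (cropping the edges).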
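The HTTPS requirement can be checked before asking for the camera. Browsers expose `window.isSecureContext`, which is `true` for HTTPS pages and for `localhost`, and in an insecure context `navigator.mediaDevices` is typically `undefined`. A minimal sketch; `checkCameraSupport` and its return shape are hypothetical names chosen for illustration, and the `win` parameter exists only so the logic can be exercised outside a browser:

```javascript
// Hypothetical helper: decide up front whether the camera can be requested at all.
// `win` defaults to the global object (i.e. `window` in a browser).
function checkCameraSupport(win = globalThis) {
  // false on plain http:// pages served from anything other than localhost
  if (!win.isSecureContext) {
    return { ok: false, reason: "insecure-context" };
  }
  const mediaDevices = win.navigator && win.navigator.mediaDevices;
  if (!mediaDevices || typeof mediaDevices.getUserMedia !== "function") {
    return { ok: false, reason: "unsupported" };
  }
  return { ok: true, reason: null };
}

// Usage in the page:
// const { ok, reason } = checkCameraSupport();
// if (!ok) alert(reason === "insecure-context" ? "Please open this page over HTTPS" : "Camera not supported");
```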

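```html
<video id="video" class="video" playsinline autoplay muted></video>
```

```css
.video {
  width: 100%;
  height: 100%;
  /* Flip horizontally so the preview behaves like a mirror */
  transform: rotateY(180deg);
  /* Fill the container (e.g. full screen in portrait) by cropping instead of letterboxing */
  object-fit: cover;
}
```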

```javascript
async getUserMedia() {
  // navigator.mediaDevices only exists in secure contexts (HTTPS or localhost)
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    // Let the caller decide how to report this
    throw new Error(
      "This browser does not support video capture, or this device does not have a camera"
    );
  }
  // Rejections (permission denied, no camera, ...) propagate to the caller
  return navigator.mediaDevices.getUserMedia({
    audio: false,
    video: {
      facingMode: "user", // front-facing camera
      // `min` is a hard requirement: a camera that cannot deliver at least
      // 1280x720 makes the call reject; use `ideal` for a soft preference
      width: { min: 1280, max: 1920 },
      height: { min: 720, max: 1080 },
    },
  });
}
```

```javascript
async openCamera() {
  try {
    const stream = await this.getUserMedia();
    this.video.srcObject = stream;
    // Start playback once the video's metadata (dimensions, etc.) is available
    this.video.onloadedmetadata = () => {
      this.video.play();
    };
  } catch (error) {
    console.log(error);
    alert("Failed to open the camera");
  }
}
```

```javascript
async closeCamera() {
  const stream = this.video.srcObject;
  if (!stream) return;
  // Stop every track so the camera is actually released
  stream.getTracks().forEach((track) => {
    track.stop();
  });
  this.video.srcObject = null;
}
```
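`openCamera` shows the same generic alert for every failure. `getUserMedia` rejects with an error whose `name` says what went wrong, for example `NotAllowedError` when permission is denied, `NotFoundError` when there is no camera, `NotReadableError` when the device cannot be started (often because another app is using it), and `OverconstrainedError` when constraints such as the `min` sizes above can't be met. A small sketch for turning these into friendlier messages; `describeCameraError` and the message texts are hypothetical:

```javascript
// Hypothetical helper: map a getUserMedia rejection to a user-facing message.
function describeCameraError(error) {
  const name = error && error.name;
  switch (name) {
    case "NotAllowedError":
      return "Camera permission was denied. Please allow camera access and try again.";
    case "NotFoundError":
      return "No camera was found on this device.";
    case "NotReadableError":
      return "The camera could not be started. It may be in use by another app.";
    case "OverconstrainedError":
      return "The camera cannot provide the requested resolution.";
    case "SecurityError":
      return "Camera access is blocked on this page.";
    default:
      return "Failed to open the camera.";
  }
}

// Usage inside openCamera's catch block:
// alert(describeCameraError(error));
```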

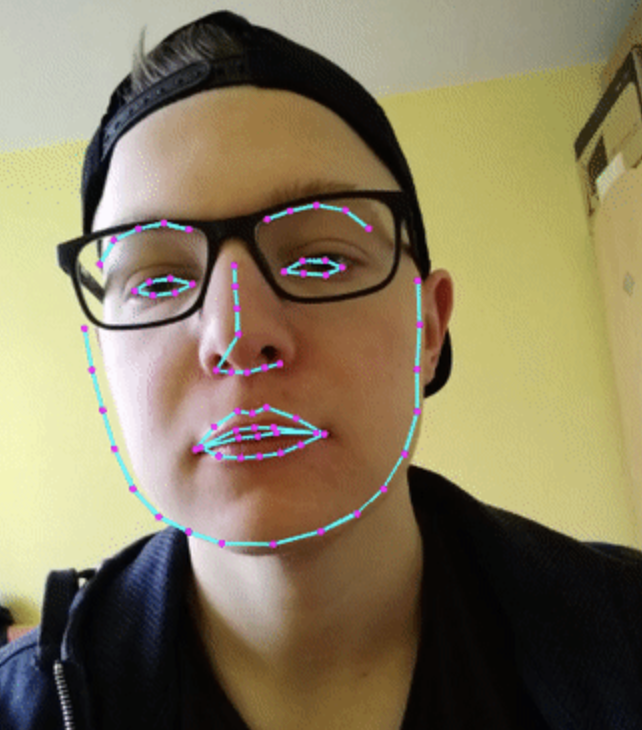

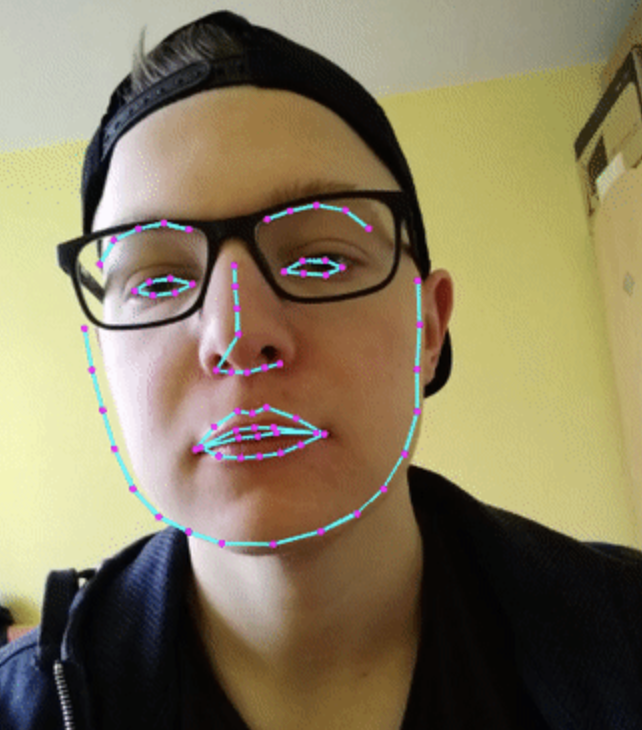

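2.3 Detecting left/right head tilt

Once face-api.js gives us the face landmarks, we can read the left-eye and right-eye points directly. Average the Y coordinates of each eye's points and compare the two averages: in image coordinates Y grows downward, so the eye with the noticeably larger average is the lower one, and the head is tilting toward that side. If the difference stays below a small threshold, the face counts as centered. That's enough to tell left from right. Simple, and no angles needed!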

```html
<div style="position: relative; width: 100%; height: 100%;">
  <video
    id="video"
    class="video"
    playsinline
    autoplay
    muted
    style="object-fit: cover"
  ></video>
  <!-- Canvas layered on top of the video for drawing detection results -->
  <canvas id="overlay" class="overlay"></canvas>
</div>
```

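```css
.video {
  width: 100%;
  height: 100%;
  position: absolute;
  top: 0;
  left: 0;
  z-index: 0;
  transform: rotateY(180deg);
}

.overlay {
  position: absolute;
  top: 0;
  left: 0;
  /* If you draw detections on this canvas, you will probably need to mirror it
     as well (transform: rotateY(180deg)) so it lines up with the mirrored video */
}
```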

```javascript
import * as faceapi from "face-api.js";

// Method of the page component: load the model weights from the static folder
async loadWeight() {
  // SSD MobileNet v1: the face detector
  await faceapi.nets.ssdMobilenetv1.load(
    "./static/weights/ssd_mobilenetv1_model-weights_manifest.json"
  );
  // 68-point facial landmark model (provides the eye points)
  await faceapi.nets.faceLandmark68Net.load(
    "./static/weights/face_landmark_68_model-weights_manifest.json"
  );
  // Age and gender model (required by withAgeAndGender())
  await faceapi.nets.ageGenderNet.load(
    "./static/weights/age_gender_model-weights_manifest.json"
  );
  console.log("Models loaded");
}
```
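`loadWeight` waits for each model to finish before starting the next download. The three models are independent, so they could also be fetched concurrently with `Promise.all`. A minimal sketch, assuming the same `./static/weights` folder; `loadWeightsParallel` is a hypothetical name, and `faceapi` is passed in as a parameter (normally the imported `face-api.js` module). It uses face-api.js's `loadFromUri(folder)`, which, as far as I know, looks for the default `*-weights_manifest.json` file names used above:

```javascript
// Hypothetical helper: start all three downloads at once and wait for all of them.
// `faceapi` is normally the module imported from "face-api.js".
async function loadWeightsParallel(faceapi, baseUrl = "./static/weights") {
  await Promise.all([
    faceapi.nets.ssdMobilenetv1.loadFromUri(baseUrl), // face detector
    faceapi.nets.faceLandmark68Net.loadFromUri(baseUrl), // 68-point landmarks
    faceapi.nets.ageGenderNet.loadFromUri(baseUrl), // age/gender estimation
  ]);
  console.log("Models loaded");
}

// Usage in the browser:
// import * as faceapi from "face-api.js";
// await loadWeightsParallel(faceapi);
```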

```javascript
handleFaceLeftOrRight(landmarks) {
  // Minimum vertical gap (in pixels) between the two eye centers
  // before the head counts as tilted
  const DIFF_NUM = 15;
  const leftEye = landmarks.getLeftEye();
  const rightEye = landmarks.getRightEye();

  // Sum the points of each eye
  const leftEyeSumPoint = leftEye.reduce(
    (prev, cur) => ({ x: prev.x + cur.x, y: prev.y + cur.y }),
    { x: 0, y: 0 }
  );
  const rightEyeSumPoint = rightEye.reduce(
    (prev, cur) => ({ x: prev.x + cur.x, y: prev.y + cur.y }),
    { x: 0, y: 0 }
  );

  // Average them to get each eye's center
  const leftEyeAvgPoint = {
    x: leftEyeSumPoint.x / leftEye.length,
    y: leftEyeSumPoint.y / leftEye.length,
  };
  const rightEyeAvgPoint = {
    x: rightEyeSumPoint.x / rightEye.length,
    y: rightEyeSumPoint.y / rightEye.length,
  };

  // Y grows downward in image coordinates: the eye with the larger Y is the lower one
  const diff = Math.abs(leftEyeAvgPoint.y - rightEyeAvgPoint.y);
  if (diff <= DIFF_NUM) return "center";
  return leftEyeAvgPoint.y > rightEyeAvgPoint.y ? "left" : "right";
}
```
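`DIFF_NUM = 15` is measured in pixels of the resized result, so the same head tilt produces a larger difference when the face is close to the camera and a smaller one when it is far away. If that turns out to matter, one hedged alternative is to threshold the roll angle of the line through the two eye centers, which doesn't depend on face size. Also keep in mind that `rotateY(180deg)` only mirrors what is displayed; face-api.js analyzes the raw frames, so "left" and "right" here are in image coordinates and may be the mirror of what the user sees on screen. The function names and the 10° default threshold below are assumptions for illustration, not values from face-api.js:

```javascript
// Average a list of {x, y} points (e.g. what getLeftEye()/getRightEye() return).
function averagePoint(points) {
  const sum = points.reduce((acc, p) => ({ x: acc.x + p.x, y: acc.y + p.y }), { x: 0, y: 0 });
  return { x: sum.x / points.length, y: sum.y / points.length };
}

// Hypothetical alternative to the pixel threshold: classify by roll angle in degrees.
// Image coordinates: x grows to the right, y grows downward.
function classifyTiltByAngle(leftEye, rightEye, thresholdDeg = 10) {
  const l = averagePoint(leftEye);
  const r = averagePoint(rightEye);
  // Positive when the left (image-side) eye is lower than the right one,
  // matching the "left" branch of handleFaceLeftOrRight
  const angleDeg = (Math.atan2(l.y - r.y, r.x - l.x) * 180) / Math.PI;
  if (angleDeg > thresholdDeg) return { direction: "left", angleDeg };
  if (angleDeg < -thresholdDeg) return { direction: "right", angleDeg };
  return { direction: "center", angleDeg };
}

// Usage with face-api.js landmarks:
// classifyTiltByAngle(landmarks.getLeftEye(), landmarks.getRightEye()).direction
```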

```javascript
async handleVideoFaceTracking(cb) {
  // Stop the loop once the camera has been closed
  if (this.closed) {
    window.cancelAnimationFrame(this.raf);
    return;
  }

  // Detect all faces in the current frame with SSD MobileNet v1,
  // then run landmark and age/gender estimation on each of them
  const options = new faceapi.SsdMobilenetv1Options();
  const results = await faceapi
    .detectAllFaces(this.video, options)
    .withFaceLandmarks()
    .withAgeAndGender();

  // Size the overlay canvas to the video's intrinsic resolution
  // and scale the results to match
  const dims = faceapi.matchDimensions(this.overlay, this.video, true);
  const resizedResults = faceapi.resizeResults(results, dims);

  cb && cb(resizedResults);

  // Run detection again on the next animation frame
  this.raf = requestAnimationFrame(() => this.handleVideoFaceTracking(cb));
}
```
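`handleVideoFaceTracking` hands each frame's results to `cb`, but the callback itself isn't shown. Because detection runs on every animation frame, the raw per-frame direction can flicker, especially when the tilt is close to the threshold. Below is a hypothetical sketch of such a callback: it takes the first detected face, classifies it with a function you pass in (for example `handleFaceLeftOrRight`), and only reports a choice after the same direction has been seen on several consecutive frames. `createTiltSelector`, `onSelect`, and the default of 5 frames are assumptions for illustration:

```javascript
// Hypothetical glue between handleVideoFaceTracking(cb) and a tilt classifier.
// classify(landmarks) must return "left" | "right" | "center".
// onSelect(direction) is called once per sustained tilt.
function createTiltSelector(classify, onSelect, requiredFrames = 5) {
  let last = "center";
  let streak = 0;
  let fired = false;

  return function onResults(results) {
    // Use the first detected face; no face counts as "center"
    const face = results && results[0];
    const direction = face ? classify(face.landmarks) : "center";

    if (direction === last) {
      streak += 1;
    } else {
      // Direction changed: start a new streak and re-arm the trigger
      last = direction;
      streak = 1;
      fired = false;
    }

    // Fire once per sustained tilt; the direction must change
    // (e.g. back to center) before the same choice can fire again
    if (direction !== "center" && streak >= requiredFrames && !fired) {
      fired = true;
      onSelect(direction);
    }
  };
}

// Usage inside the page component:
// this.handleVideoFaceTracking(
//   createTiltSelector(
//     (landmarks) => this.handleFaceLeftOrRight(landmarks),
//     (direction) => console.log("selected", direction)
//   )
// );
```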

3. References

face-api.js

MDN: MediaDevices.getUserMedia()